An asynchronous debate on AI & copyright with Peter Kyle

Kyle responded to my questions via The Rest Is Politics, but continued to make misleading claims.

A few weeks ago, Peter Kyle, the UK Secretary of State for Science, Innovation & Technology, went on the podcast The Rest Is Politics and was quizzed about his proposals on AI & copyright. I tweeted a response, calling out four claims he made that I thought were misleading.

Yesterday, Alastair Campbell was good enough to relay my questions to him when he paid another visit to the podcast. Unfortunately, he continued to make misleading claims. So I’ve written up his responses below, interspersed with my commentary.

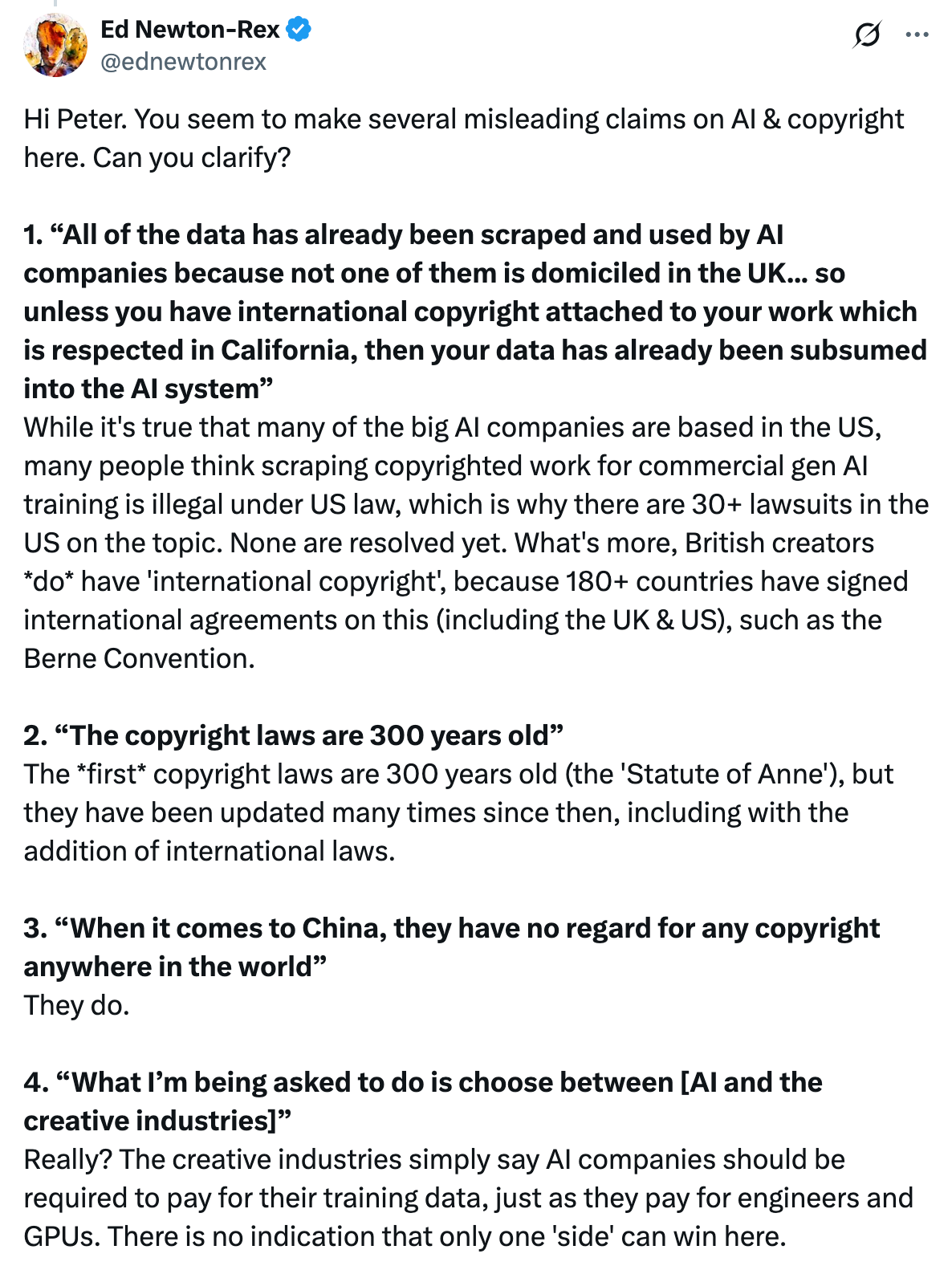

To start, here was my initial tweet:

Below are Peter’s responses - which you can listen to in full here if you’re a The Rest Is Politics member - along with my comments (emphasis mine).

PK: “Firstly on the copyright law and the age of it, I mean, they have been going back 300 years, and the principles remain the same.”

Kyle originally said “the copyright laws are 300 years old” in order to justify needing to change them. While he is right that some of the principles remain the same (authors should get a temporary monopoly over copies of their work), the specifics have changed a great deal since 1710. I don’t see why you would say “the copyright laws are 300 years old” except as a hugely misleading way of suggesting they are outdated.

PK: “We just need to update them for the digital age. And if we carry on with the legislation as it is today, the very fact that so many issues are ending up in courts to be litigated, and it is extremely expensive to litigate, that it means that the law simply isn’t working, simply because if the law was working, then people wouldn’t end up in court all the time - and the only people who are ending up in court are the ones who have representation in very deep pockets - it’s either big creators, who are very successful, or it is people who have representation by companies who have deep pockets on behalf of the people they represent. And that’s just not acceptable, because small content creators aren’t getting the protections they need under the law as it stands at the moment.”

This is incredibly misleading. There is only one generative AI lawsuit in the UK: Getty Images vs. Stability AI. People in the UK *do not* “end up in court all the time” over this issue, because the law as it stands is clear: training commercial generative AI models on copyrighted work without a licence is illegal. AI companies understand the law, and for the most part respect it.

There *are* lots of generative AI lawsuits in other countries, particularly the US. The law is more open to interpretation there, because of the way it is set up (a ‘fair use’ copyright exception that is judged on a case by case basis), and generative AI companies are taking their chances. But these are not UK lawsuits or UK companies. Kyle says the number of lawsuits shows “the law simply isn’t working”, but he is talking about changing British law, where there is just *one single* lawsuit.

So, to be clear: small content creators are *already* getting the protections they need in the UK. Kyle has recommended changing copyright law in a way that these creators virtually unanimously think would remove these protections.

PK: “When it comes to DeepSeek and China, it is very clear from the way that it is using the English language that it has access to certain bits of material which other large language models don’t. So I think we can infer from that that they are using copyrighted material from the UK and other territories, simply because of the way that it is using the English language. Now that is quite widely accepted now - that’s not just me suppositioning as somebody who’s not a scientist. And also we know that China is using copyrighted material, which is gained by lots of other areas. We want to work with China constructively, but I think it’s widely accepted now that China is using language from other sources as well. And these are things that should really frustrate us.”

Yes, DeepSeek is clearly trained on British copyrighted work. (I was taking issue previously with his suggestion that China “have no regard for copyright anywhere in the world”, which is overly general - they do. But I agree that DeepSeek and other models are trained on copyrighted work without a licence.)

But any decision by China to ignore British creators’ rights should not be met by legalising IP theft here. We should instead hold them accountable. This can be done with recourse to the Berne Convention and similar international treaties on copyright, to which both the UK and China (and the US!) are signatories. A government that is truly frustrated by other countries exploiting our IP, and that truly wanted to protect British creators, would enforce its creators’ rights on the international stage in this way, rather than simply copying unfair policies as soon as they’re implemented in other countries.

PK: “Now, being asked to choose between one or the other, nobody has said to me directly, ‘make a choice’. But the options that are being put in front of me on both sides from certain quarters would mean it would have a detrimental impact on the ability of one or t’other market to thrive. And the people who are creating innovations, whether it be creative or whether it be technological, wouldn’t be able to thrive in this economy in the way that they are thriving in other economies.”

It’s very important to note that many people think that lots of the generative AI training that has happened in the US represents copyright infringement on an enormous scale. Given that the US leads the world in AI, I imagine that Kyle wants us to think of the US when he says “in the way that [people who are creating innovations] are thriving in other economies”. But there is a real chance that US courts will find the actions of many US AI companies to be vast copyright infringement. The UK should not second-guess US law before cases in the US are decided.

PK: “So that, by definition, is asking me to make a choice between one or the other. So I realize that nobody’s saying it explicitly, but the implications of what some people are asking for would mean that I would have to make a choice, and I want both markets to thrive - and that means both sectors are going to have to come together in a way that they haven’t before. Because if they’d come together before, we wouldn’t be in a position where a politician has to make the choices.”

This makes out like the creative industries are in some way to blame for the position we’re in. But, to stress again - UK law is currently clear: training commercial generative AI models on copyrighted work without a licence is illegal. Why should the creative industries have ‘come together’ with the AI industry to change this?

The last government attempted to get the creative industries and the AI industry to come to a voluntary agreement that would change copyright law. But, as far as I know, it was the AI companies who walked away from the discussions.

At this point, Rory Stewart asks: “What can you do to protect those content creators? How are they going to make an income in the future?”

PK: “Rory, this is exactly what I am working on. And things have to change in order to have protections in the digital age, in the AI age, in the supercomputer age that lies before us. We can’t stop this happening. So it is going to happen. I mean, look, we could outlaw AI in our country altogether. It wouldn’t stop it being disruptive and coming into Britain from the outside.”

The creative industries are certainly not proposing outlawing AI altogether. But this is still misleading: we *can* make laws that affect what comes into Britain from the outside. Baroness Kidron, in fact, made amendments to the Data (Use and Access) Bill that would have explicitly made British copyright law apply to overseas AI products made available in the UK, which the House of Lords approved - but Kyle’s government removed them.

PK: “So this is the point I’m trying to make, is that I’m trying to find a way that content creators large and small can make a living in the digital age.”

(It goes without saying that Kyle doesn’t actually answer Stewart’s question - he doesn’t say anything he will do to protect content creators, or offer an opinion on how they will make a living in the future.)

PK: “Because at the moment we are moving towards the age where AI is going to disrupt content creation. It is - we can’t stop that. So how can we ensure that in that period of disruption, the people who are creating content can make a living going forward? So there are ways of doing it. And just look at things in the past, people did say that when Google started doing web searches and prioritizing web searches, that that would be the end of content creation. People said the same about Spotify. You remember the enormous row that we had about Napster, and then the move to Spotify, and then the move to Apple Music.”

The Napster / Spotify example is a very odd one to bring up, as it goes against everything Kyle is saying. Napster took a very similar stance to today’s AI companies: they didn’t pay for licences for the music on their platform; in the US, they argued what they were doing was ‘fair use’. Governments could have legalised this theft, as Kyle proposes doing with AI companies. But instead, they upheld the law. That is *why* Spotify took off - because Spotify was the company that managed to sort out licensing. Spotify paid for music.

Let’s hope that Kyle mirrors this, upholds copyright law, and realises that licensing - which is currently required by law in the UK, and which is all that the creative industries are asking for - is the only solution that is fair to all parties, just as it was at the dawn of music streaming.

PK: “Now I’m not saying all of these things are perfect, and I’m not saying that that transition has been easy, or that it hasn’t disrupted and it hasn’t negatively impacted some creators. But just as the move towards that - and that was Web 2.0, you know, that was the web version that we have at the moment - the next version of the internet is going to be driven and powered by artificial intelligence. It will mean that people can create rather than just choose where they can consume from. And that is going to be disruptive. But it is people desiring the power to do that that is driving this. It is not just people who are running technology companies and creating it that is causing the disruption. It is the desire of everyone to be able to create content, even though sometimes they don’t have the natural ability of Ed Sheeran and Adele and Paul McCartney and creators large and small. So we have to find a way for remuneration.”

Yes, there is clearly demand for generative AI. But we *have* a way to remunerate the creators whose work is used in training: licensing that work. This is *currently required by law in the UK*. The public’s desire for generative AI has nothing to do with this - you can still have generative AI if AI companies license their training data. Several AI companies already do this.

PK: “I don’t have all the solutions, but I have said very specifically that I will not move forward with legislation until I know how we can have remuneration and respect for people’s creations and content when we move into it.”

This is interesting, and worrying, because it is not quite what he has said before. Previously, he has said they need to be sure a solution works for creators - and you can be sure that creators will be very clear as to whether or not a solution works for them. But he is no longer saying that - he is now saying the only bar is some form of remuneration, and a vague promise of “respect for people’s creations and content”, which I doubt will reassure many creators out there.

PK: “And I just reiterate, we’ve closed the consultation; I haven’t drafted a single word of legislation yet, and everybody is acting as if I have. I made some choices and outlined some opinions I had at the time in the consultation - and I know that the opt-out system that I put forward was the thing that’s triggered a lot of this, this sort of, like, really robust debate. But, you know, I’m open minded going forward.”

It’s great if he’s genuinely open-minded - but I don’t think anyone can be surprised about the level of anger at the way the consultation was presented. The government said clearly that it had a preferred option (which involved upending copyright law to favour AI companies). The AI Opportunities Action Plan, whose author Matt Clifford said the government had agreed to implement all its recommendations, included a recommendation to “reform the UK text and data mining regime so that it is at least as competitive as the EU” (which has an opt-out requirement that is unworkable and unfair to creators).

PK: “But I’ve got to say, if the current situation was tenable, then people wouldn’t be complaining about AI in the first place. The fact that content creators large and small, and publishers, are expressing such deep concern about AI, now, shows that the current legislative framework isn’t working and fit for the moment we’re in…”

This is perhaps the most misleading thing he says in the entire interview. Content creators & publishers are not expressing deep concern about the UK’s copyright law. They are concerned about US companies’ actions, absolutely. But they are united in saying that the UK’s copyright law is fit for purpose, and should not be weakened. Concern about AI in general *clearly* does not mean creators are concerned about the UK’s existing copyright framework. Far from it - they want the government to uphold the law, as this is what will protect them.

And as regards overseas companies and legislation, creators should be able to rely on the UK government to enforce international copyright law. Again, the government should be standing up for the UK’s creators via recourse to existing international treaties on copyright, rather than proposing that we weaken copyright law despite their loud and unified objections.

PK: “… and the moment we’re in is going to look fundamentally different in five years’ time. So we have to update legislation, and it has to be respecting both industries, the AI industry and the content creation industry.”

I will end with the observation that Kyle has not said *why* we need to update copyright legislation, other than saying that the current law is untenable partly because creators do not think it is tenable - which is demonstrably false.

The UK’s existing copyright law already respects both industries: in requiring training data be licensed, it lets the AI industry get the training data it needs, while ensuring copyright holders are paid fairly. Why change this?

That’s the end of the Rest Is Politics segment. As I’ve said before, I’d love to debate Kyle in person, and I’d be happy to do so at a time & location of his choosing. He is repeatedly making misleading claims that I would love to have the opportunity to challenge in real time.
