Manufacturing 'uncertainty' in order to change copyright law?

UK government representatives keep saying copyright law is uncertain. It's not.

The UK government reportedly wants to introduce a copyright exception that would allow generative AI developers to train their models on copyrighted works without a license, with this their “preferred outcome” to the AI copyright debate - despite it being opposed by many of the world’s creators.

Interestingly, government representatives have started calling existing UK copyright law “uncertain”, as if it somehow doesn’t account for generative AI training, and new law is needed in order to fill some kind of gap in legislation. For instance, in a debate on generative AI in the House of Lords last week, Lord Vallance, the Minister of State for Science, said (emphasis mine):

“Addressing uncertainty about the UK’s copyright framework for AI is a priority for DSIT and DCMS.”

And at the Times Tech Summit in October, Feryal Clark, the Parliamentary Under-Secretary of State for AI and Digital Government, said (emphasis again mine):

“[The government is] working through what we need to do to resolve the issue and to bring clarity to both the AI sector [and] also to creative industries”.

But is there really any uncertainty regarding how to interpret current UK copyright law in the context of generative AI? Is clarity missing?

The answer, of course, is no: in the UK, there is no copyright exception for commercial generative AI training. That is, the law is currently clear: you need to license the data you train on.

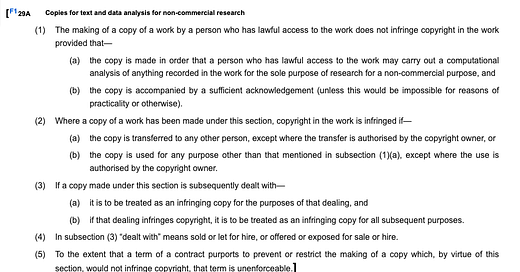

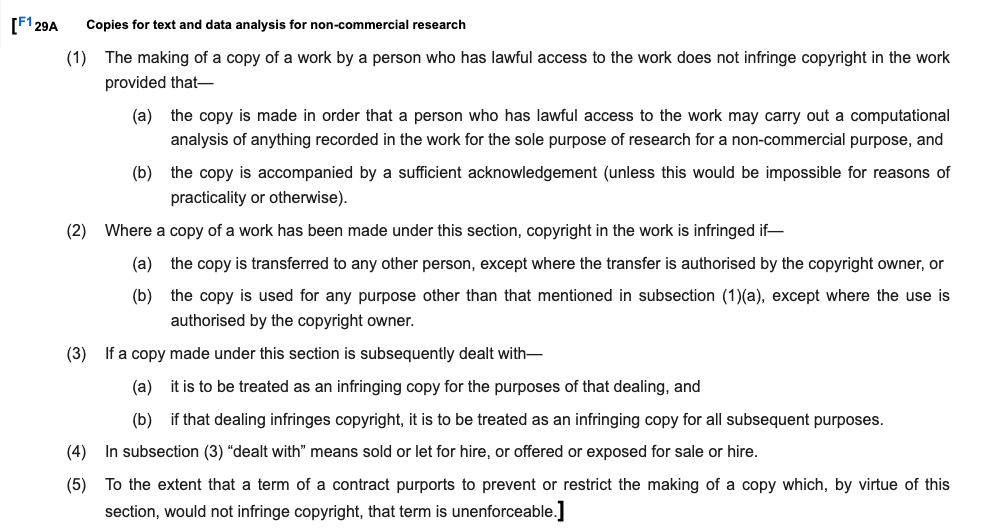

The 1988 Copyright, Designs and Patents Act makes it clear that there is a text data mining (TDM) exception only for non-commercial research (at 29A):

This is widely accepted in legal circles. For instance, when discussing exceptions from liability from copyright infringement in gen AI training, Clifford Chance says “UK law currently permits "text and data analysis" only for non-commercial research (s. 29A, CDPA)”.

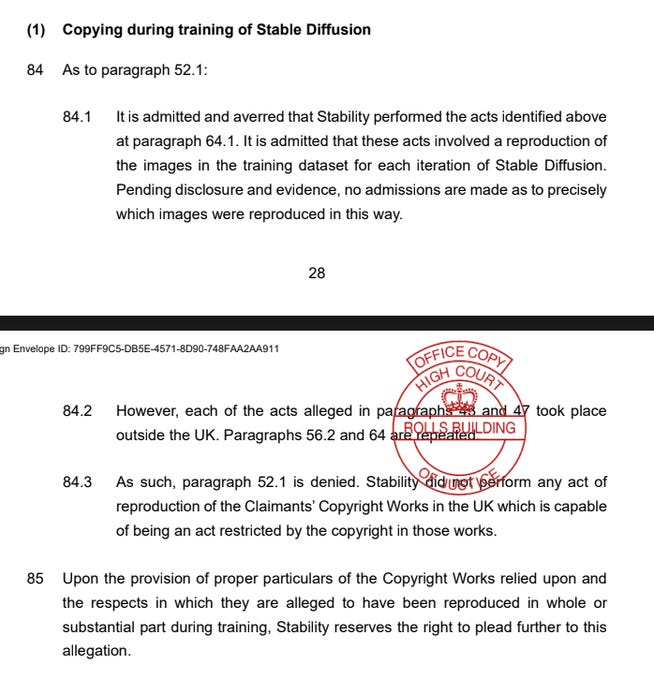

And even British AI companies don't seem to argue it's legal. For instance, in the only UK copyright lawsuit over generative AI training, Getty vs. Stability, the defence made by Stability to the claim over copying during training is simply that they didn’t copy the images in question, or do the training, in the UK; not that doing so would be legal.

Why, then, are government representatives suggesting uncertainty in the law when there is none? It is presumably at least partly due to lobbying from big tech companies. Google’s managing director said in September:

The unresolved copyright issue is a block to development, and a way to unblock that, obviously, from Google’s perspective, is to go back to where I think the government was in 2023 which was TDM being allowed for commercial use.

(To be clear, TDM was not allowed for commercial use in the UK in 2023, and never has been; and the copyright issue is not “unresolved”.)

My fear - and I know it’s a fear many creators and rights holders share - is that manufacturing ‘uncertainty’, when in fact the law is clear, is an intentional tactic to pave the way for changing copyright law. If people become convinced current law is uncertain, they’ll see it as only natural that new law be written, which gives the government the opportunity to make legislation more favourable to AI companies at the expense of the creative industries.

So, if you’re in the UK, as big tech lobbying over this issue comes to a head in the coming months, and as the government launches its consultation on the topic, remember that there’s no uncertainty over what the law is today. Training generative AI models on copyrighted work without a licence is illegal. If the government wants to upend copyright law to benefit AI companies, they should come out and say that, rather than hiding behind the pretence of uncertainty.

Great post Ed.